Thinking about how we use evidence in user-centred design

Working in a large government department like the Department for Work and Pensions has created a few challenges in bringing together traditional evidence-driven decision making and a new focus on delivering digital by default services.

As a user-centred design team we work closely alongside teams of government analysts, whose work has long been at the heart of how government departments operate.

Different language, same focus on outcomes

I’ve found that we often speak a very different language while talking about exactly the same things.

Sometimes we speak about these things with a different emphasis, but it’s important to acknowledge that policy, operations and service design in government are broadly all about delivering things that solve problems for people.

We can call this changed outcomes, or behavioural change, but it’s really about what people are able to do next. It’s what the service helps them do, anything from finding a job to planning towards their retirement.

Moving an analyst culture towards continuous learning

The challenge for me is how to bring together the more traditional government functions, like social research and behavioural science, with design research and a user-centred design process embedded in a digital delivery culture.

I think that we’re missing an opportunity. It’s a misconception to think that we need to change how everyone in government works. Instead, we need to encourage people to work together more to solve our biggest, most challenging problems.

Wherever you find an analyst culture that depends on evidence and certainty, I think there's a real opportunity to move people towards continuous learning by doing, while still acknowledging that evidence is an important part of the process.

Having evidence is never a bad thing; good design is about constraints

Good design is always about constraints.

For us these constraints include policy intent; the principle that government should only do what only government can do; the need to operate services at scale; and the technology and security considerations that come with keeping people safe and their personal data secure.

The important thing is understanding when to apply constraints. In doing this we need to create space for learning by doing as part of a creative process.

I want to emphasise that the important thing here is learning. It's about how you bring evidence into a process at the right times to provide appropriate constraints to the problem you're trying to solve.

It's about doing the best possible job of framing the problem you're working on, and providing the right focus for a delivery team at the right time.

This is where I think designers are important. They should be able to bring absolute focus to framing the right problem to work on, supported by user research.

The following two examples provide a framework for considering how we apply evidence as a constraint to service delivery. I've started with an example of how not to apply evidence to service delivery (the sad path), and then I'll talk you through what I think the happy path looks like.

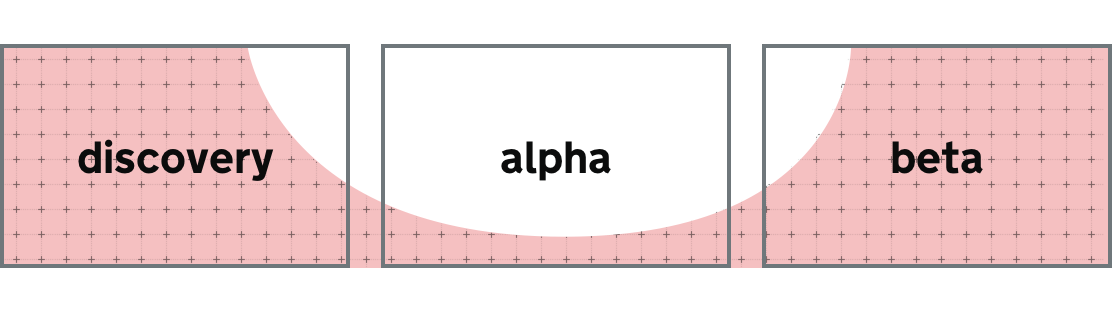

Evidence and service design: the sad path

All too often in agile delivery the way we use evidence is the wrong way round. It looks something like this.

In the sad path, discovery is about understanding requirements, not problems.

We’re too slow to go out and talk to people to understand user needs and it takes too long to bring existing evidence into the room to help shift the emphasis away from organisational thinking.

Alpha is then about designing to business requirements, not iterating to learn about a problem.

In this situation the amount of design iteration a team can produce is constrained by policy and by the existing understanding of how things need to operate.

The team make-up is often a sign that evidence is limiting the effectiveness of an alpha. If a team is heavy on business analyst roles, this can be a sure sign that we're trying to map solutions to constraints too early in the process.

Finally, in the sad path, beta is about delivering the project requirements rather than continuous improvement. We don't focus enough on measuring outcomes, and our focus on evidence drops off quickly after initial delivery. As a result, we don't know how well our service is meeting user needs, or where the opportunities are to improve how well things really work for people.

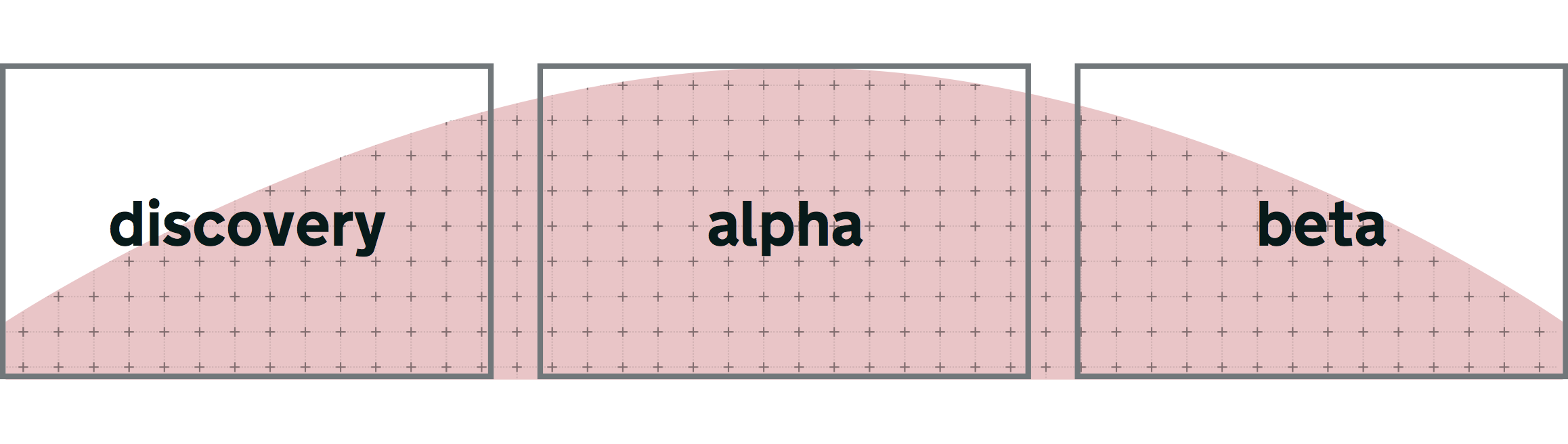

Evidence and service design: the happy path

This is the happy path. It's how I think we should approach using evidence for digital by default service delivery.

In the happy path, discovery is about bringing all the evidence we can find into the room to help us understand the problem.

This should include organisational data, anything that we already know from behavioural science, and everything we’re learning from the team doing user research together to understand user needs.

The things we already know can help identify the groups of users we need to talk to first and show us where to start. We’re always learning so this also continues to shape what we think of as ‘evidence’.

Alpha is then all about learning by doing.

All the evidence we’ve collected is still in the room, but the only constraint in alpha should be the problem.

The problem is best defined as finding ways to meet the user needs we’ve identified in discovery.

The goal is to iterate wildly. This means we test different ideas with real users to see what works and what doesn’t.

Sometimes this means we prototype to learn and test things that would not be a viable final solution.

Towards the end of alpha, teams need to think about the impact of constraints such as policy, operations and technology, and about what a viable product might look like as they start to deliver a scalable product.

In the happy path, beta is all about delivering real working software and seeing how well it works for people.

As we move towards private and then public beta we start designing and improving a service using live performance data. This means we focus on a new type of evidence-driven decision making with key metrics helping us use hypothesis-driven design to prioritise product improvements. Our focus on bringing evidence into the room steadily increases as we move towards a fully live service.

Challenges going forward

The default position in government is usually that the more complex the problem, the more business analysis we need to do.

I think the challenge is to look at it the other way around: the more complex the problem, the more creative space we need to create different solutions for testing to see what really works for people. We can then shape our delivery around those solutions, understanding constraints as we continue to transform the underlying technology and platforms we depend on for delivery.

It's clear as we deliver more services that we need to take a more evidence-driven approach to measuring what happens. That means looking at key metrics to understand whether services really deliver value, and checking that against how we prioritised what to build and deliver in the first place.

The happy and sad path examples are very much up for discussion. I would be happy to iterate these, and learn more about how others are bringing together different ways of working.
